A step-by-step guide to switching to GPT-4o safely with Helicone

Scott Nguyen· May 14, 2024

With the introduction of GPT-4o, OpenAI's multimodal and multilingual flagship model, you may be considering switching from your existing model. However, switching models can be risky and time-consuming. You may be worried about the impact on your users, the quality of the new model's output, and the time the migration will take.

Helicone's experiments feature lets you make the switch to GPT-4o safely and easily. In this article, we'll show you how to use it to regression test, compare, and switch models.

Prerequisites

- Have a Helicone account. If you don't have one, you can sign up for free here.

- Have at least 10 logs in your Helicone project.

- Use Helicone's prompt templating feature. You can learn more about that here.

Let's get started

For this tutorial, we'll use a simple storytelling app that generates stories based on a scene the user provides.

Step 1. Use the code snippet below to log your first Helicone prompt

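import OpenAI from "openai";
import { hprompt } from "@helicone/helicone";

// Assumed client setup (not shown in the original snippet): send requests
// through Helicone's OpenAI proxy so that they are logged.
const openai = new OpenAI({
  apiKey: process.env.OPENAI_API_KEY,
  baseURL: "https://oai.helicone.ai/v1",
});

// Example input; in the app this comes from the user.
const character = "a secret agent";

const chatCompletion = await openai.chat.completions.create(
  {
    model: "gpt-3.5-turbo",
    max_tokens: 128,
    messages: [
      {
        role: "user",
        content:
          "You are a magical storyteller that builds extravagant stories based in medieval times.",
      },
      {
        role: "assistant",
        content: "Great! What scene do you want to build a story with?",
      },
      {
        role: "user",
        // Add hprompt to any string, and nest any variable in additional brackets `{}`
        content: hprompt`Write a short story about ${{ character }}`,
      },
    ],
  },
  {
    // Add Prompt Id Header and Helicone Auth Header
    headers: {
      "Helicone-Prompt-Id": "Storyweaver",
      // Helicone expects the API key as a Bearer token
      "Helicone-Auth": `Bearer ${process.env.HELICONE_API_KEY}`,
    },
  }
);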

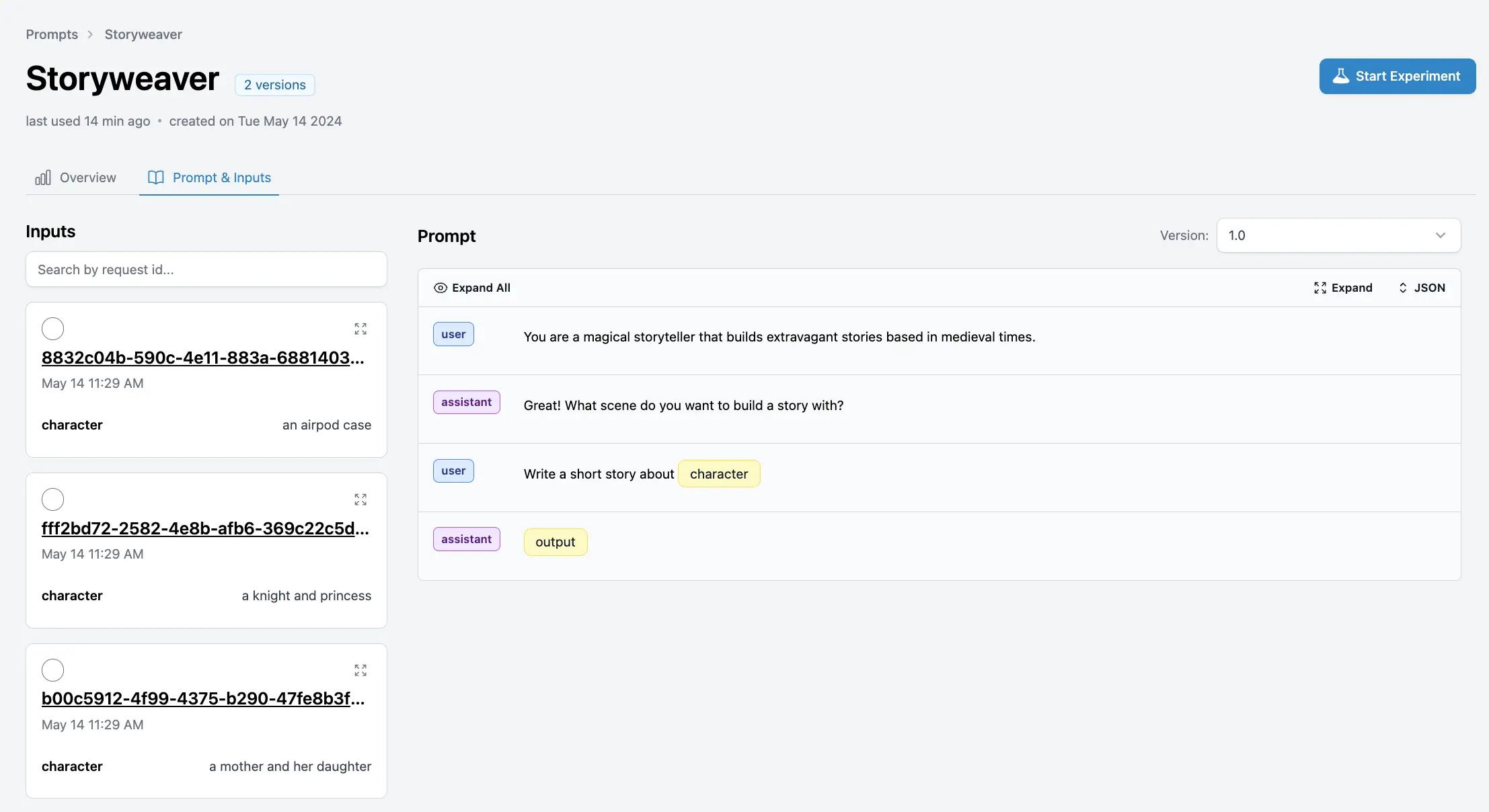
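
The double braces in `${{ character }}` are plain JavaScript: the inner `{ character }` is object shorthand, so the template tag receives `{ character: "..." }` and can read the variable's name as well as its value. The sketch below illustrates that mechanism with a hypothetical tag function, `tagInputs`. It is not Helicone's implementation, and the return shape is an assumption made for illustration.

```typescript
// Hypothetical tag function, for illustration only (not part of @helicone/helicone).
type TaggedPrompt = { text: string; inputs: Record<string, string> };

function tagInputs(
  strings: TemplateStringsArray,
  ...values: Array<Record<string, string>>
): TaggedPrompt {
  const inputs: Record<string, string> = {};
  let text = "";
  strings.forEach((chunk, i) => {
    text += chunk;
    const value = values[i];
    if (value === undefined) return; // the last chunk has no value after it
    // Each interpolated value is an object such as { character: "a brave knight" }.
    for (const [name, content] of Object.entries(value)) {
      inputs[name] = content; // remember the variable name and its value
      text += content; // and render the value into the prompt text
    }
  });
  return { text, inputs };
}

const character = "a brave knight";
const storyPrompt = tagInputs`Write a short story about ${{ character }}`;
console.log(storyPrompt.text); // the rendered prompt
console.log(storyPrompt.inputs); // the variable names and values the tag captured
```

Because the variable name travels with the value, a templating layer can keep the prompt's fixed text and its inputs separate, which is what makes it possible to replay the same inputs against a different model or prompt version later.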

Step 2. Navigate to the prompts page to view your Storyweaver prompt

You should see this on your /prompts page in Helicone:

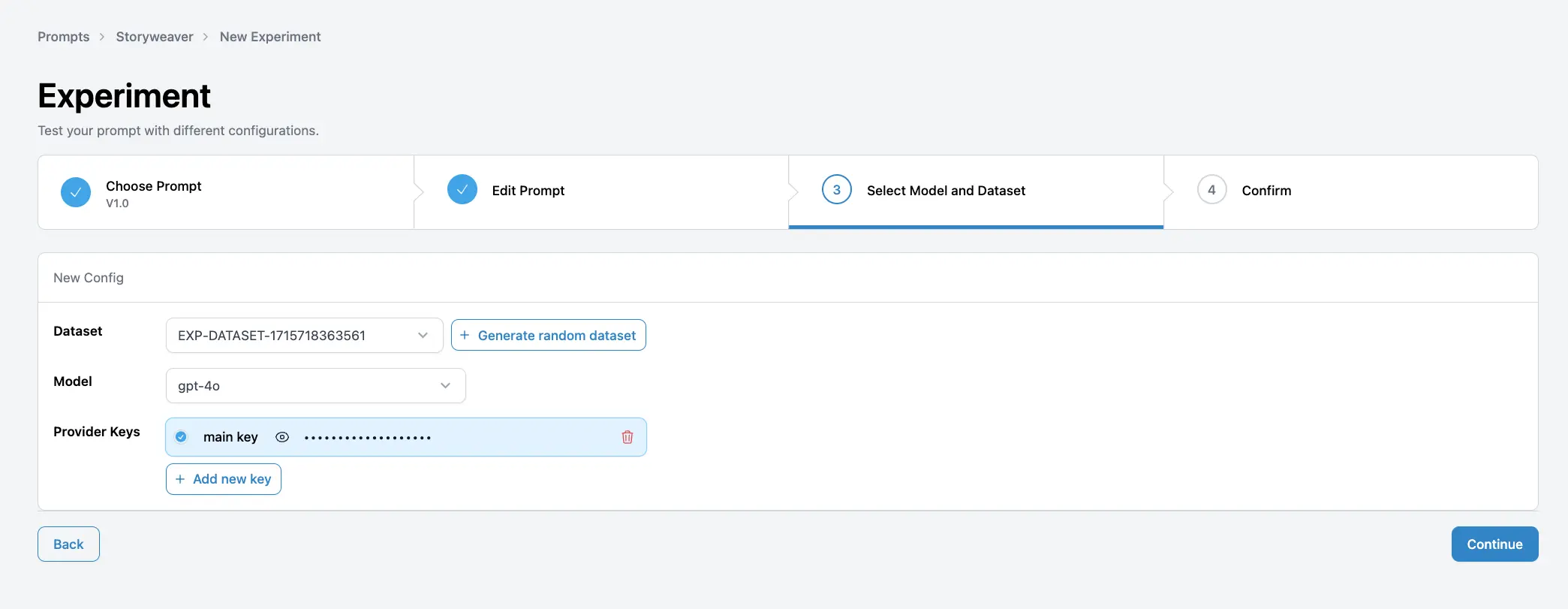

Step 3. Go through the New Experiment flow and select the gpt-4o model

Configure your experiment by selecting the dataset, model, and prompt you want to test. In this case, we will select the gpt-4o model.

Note: You can update the prompt as well as the model if you want to test different combinations.

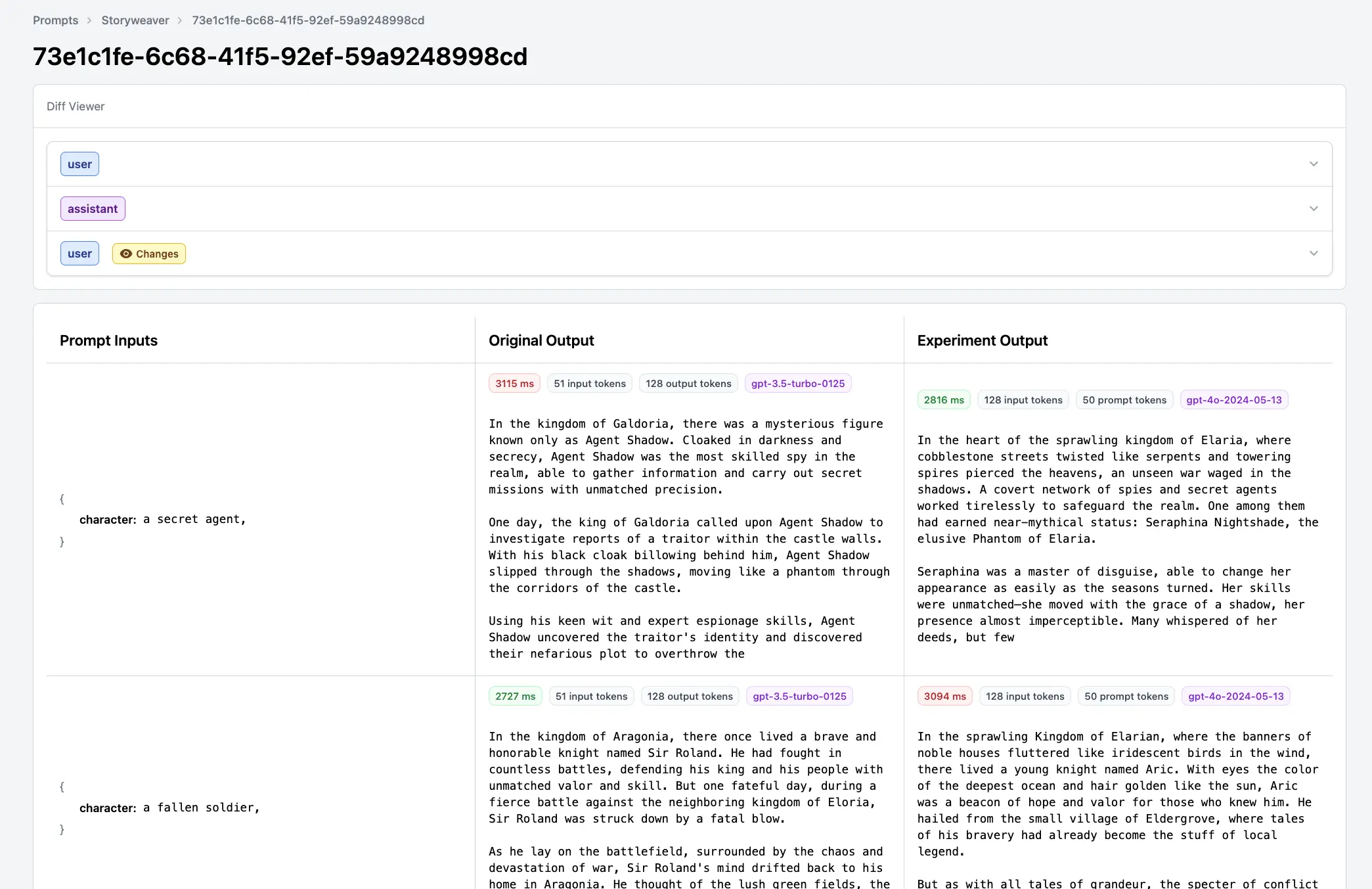
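
Conceptually, an experiment like this replays the inputs you have already logged through the same prompt template with a different model. The sketch below shows that idea in plain TypeScript. `LoggedInputs`, `fillTemplate`, `buildExperimentRequests`, and the `{{name}}` placeholder syntax are hypothetical names chosen for illustration, and the code is not how Helicone runs experiments internally.

```typescript
// Illustrative only: these types and helpers are assumptions, not Helicone APIs.
type LoggedInputs = Record<string, string>;

type ChatRequest = {
  model: string;
  max_tokens: number;
  messages: { role: "user" | "assistant" | "system"; content: string }[];
};

// Replace each {{name}} placeholder with the matching logged input value.
// Unknown placeholders are left untouched.
function fillTemplate(template: string, inputs: LoggedInputs): string {
  return template.replace(
    /\{\{(\w+)\}\}/g,
    (placeholder, name: string) => inputs[name] ?? placeholder
  );
}

// Build one request per dataset row, holding the prompt fixed and
// varying only the model.
function buildExperimentRequests(
  template: string,
  dataset: LoggedInputs[],
  model: string
): ChatRequest[] {
  return dataset.map(
    (inputs): ChatRequest => ({
      model,
      max_tokens: 128,
      messages: [{ role: "user", content: fillTemplate(template, inputs) }],
    })
  );
}

const dataset: LoggedInputs[] = [
  { character: "a secret agent" },
  { character: "a lost dragon" },
];
const template = "Write a short story about {{character}}";

const baseline = buildExperimentRequests(template, dataset, "gpt-3.5-turbo");
const candidate = buildExperimentRequests(template, dataset, "gpt-4o");
console.log(candidate[0]);
```

Keeping the inputs and prompt identical across both request sets means any difference in the outputs can be attributed to the model change alone.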

Step 4. Compare the results of the gpt-4o model with your existing model

You can now visualize the differences between the two models and decide if you want to switch over to gpt-4o.

Note: You can also score the outputs based on your criteria and compare the models based on the scores.
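
One way to make that comparison concrete is to score both outputs for each input and summarize the results. The sketch below assumes a hypothetical `ComparisonRow` shape and a toy scoring rule (whether the story mentions the requested subject). Neither comes from Helicone, and in practice you would substitute your own criteria.

```typescript
// Hypothetical row shape: one logged input with the output from each model.
type ComparisonRow = { input: string; baseline: string; candidate: string };

type Scorer = (input: string, output: string) => number;

type Summary = {
  baselineMean: number;
  candidateMean: number;
  candidateWins: number;
  regressions: number;
};

function summarize(rows: ComparisonRow[], score: Scorer): Summary {
  let baselineTotal = 0;
  let candidateTotal = 0;
  let candidateWins = 0;
  let regressions = 0;
  for (const row of rows) {
    const b = score(row.input, row.baseline);
    const c = score(row.input, row.candidate);
    baselineTotal += b;
    candidateTotal += c;
    if (c > b) candidateWins += 1;
    if (c < b) regressions += 1; // candidate scored worse on this input
  }
  const n = Math.max(rows.length, 1); // avoid dividing by zero on an empty set
  return {
    baselineMean: baselineTotal / n,
    candidateMean: candidateTotal / n,
    candidateWins,
    regressions,
  };
}

// Toy criterion: 1 if the output mentions the requested subject, else 0.
const mentionsInput: Scorer = (input, output) =>
  output.toLowerCase().includes(input.toLowerCase()) ? 1 : 0;

const rows: ComparisonRow[] = [
  {
    input: "dragon",
    baseline: "A knight rode out at dawn.",
    candidate: "The dragon slept beneath the keep.",
  },
  {
    input: "queen",
    baseline: "The queen held court.",
    candidate: "The queen rode north.",
  },
];

const summary = summarize(rows, mentionsInput);
console.log(summary);
```

Tracking regressions separately from the mean is a deliberate choice here: a candidate model can improve the average while still doing worse on specific inputs that matter to your users.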

That's it!

With Helicone, you can safely experiment using production data and prompts without the worry of impacting your users.

We are excited to see how you use Helicone's experiments feature in production and would love to hear your feedback. Some of our customers are using experiments today to:

- Create, test, and compare new versions of their prompts.

- Regression test new models before switching over.

- Attach experiment ID outputs to their pull requests for easy review.

If you have any questions or need help getting started, feel free to reach out to us here. Happy building!
